November 10, 2023 | Channing Lovett

Introduction of AI in Data Centers: Demystifying the Impact

The rise of artificial intelligence (AI) has sparked mixed feelings among both consumers and businesses. On one hand, there’s excitement about how AI can improve processes and user experiences. On the other hand, introducing AI into data centers brings challenges, chief among them the greater demands these resource-intensive workloads place on the facilities.

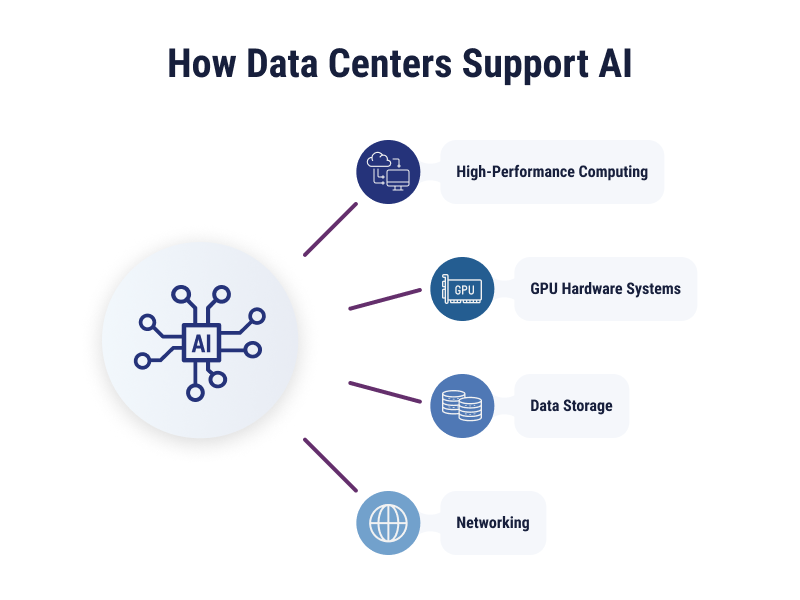

Why Are Data Centers Essential for Supporting Artificial Intelligence?

The global AI market is currently valued at $150.2 billion, and data center operations need to keep up with both its dramatic growth and its evolving needs. After all, data centers provide the computational power and storage capacity needed to train and deploy complex models. Without these facilities, the processing demands of AI would overwhelm individual machines and networks, which makes centralized data centers essential for the development, deployment, scalability, and efficiency of AI applications.

High-Performance Computing

High-density colocation configurations, often featuring specialized cabinets, have become necessary to accommodate the demands of high-performance computing (HPC) and AI workloads. Because of their intense processing requirements, these workloads rely on specialized hardware, which in turn has greater cooling and power requirements than the hardware behind less complex workloads. High-density colocation data centers are engineered to support these demanding workloads.

GPU Hardware Systems

When it comes to accelerating AI workloads, GPUs are a necessity. Many AI workloads benefit from parallel processing, which GPUs are built to provide. As a result, GPUs can speed up both the training and the inference of AI systems.

Because GPUs enable more intense processing, they also have higher power and cooling requirements. Data centers need to be able to remove the heat GPUs generate, both to keep them running at peak performance and to prevent premature equipment failure.

Data Storage

AI workloads, which include large language models and other applications, also require large amounts of data. Models are trained on extensive datasets and must pull from data during inference, which makes storage, especially high-performance storage, paramount. As a result, data centers are increasingly prioritizing all-flash storage to accommodate this data. This storage also needs to scale quickly as workload needs change.

Networking

Distributed, high-speed, low-latency networks are important for AI workloads to perform at their expected level. Data centers need high-speed connections, such as 100 Gbps or 400 Gbps Ethernet, as well as low-latency networking from technologies like InfiniBand and RDMA over Converged Ethernet (RoCE).
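
To give a rough sense of why link speed matters, the sketch below computes the idealized time needed to move a dataset over links of different speeds. The 10 TB dataset size is a hypothetical example, and the calculation assumes the link runs at full line rate with no protocol overhead, so real transfers will take longer.

```python
def transfer_time_seconds(dataset_bytes: float, link_gbps: float) -> float:
    """Idealized transfer time: assumes full line rate and no protocol overhead."""
    bits = dataset_bytes * 8           # bytes -> bits
    bits_per_second = link_gbps * 1e9  # Gbps -> bits per second
    return bits / bits_per_second


# Hypothetical 10 TB (decimal) dataset, used only for illustration.
DATASET_BYTES = 10e12

for gbps in (10, 100, 400):
    minutes = transfer_time_seconds(DATASET_BYTES, gbps) / 60
    print(f"{gbps:>3} Gbps: {minutes:6.1f} minutes")
```

Under these assumptions, the same dataset takes 800 seconds (about 13 minutes) at 100 Gbps and 200 seconds (about 3 minutes) at 400 Gbps.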

Power Consumption of AI Data Centers

Growing needs for storage, networking, and hardware also mean that AI increases the power consumed by IT equipment. Data centers need to be equipped for the required power density and may even have to retool their designs to meet these requirements.

Power Density

Power density is the power consumed per unit of floor area in a data center. It is closely related to rack density, which describes how much IT equipment is installed in a single rack and is often expressed in kilowatts per rack. AI data centers have both high power density and high rack density: AI models run on specialized hardware that consumes a lot of power, and they require more hardware overall.
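
The two metrics can be illustrated with simple arithmetic. The sketch below compares a traditional deployment with an AI deployment in the same room. The room size, rack count, and per-rack loads are all hypothetical values chosen for illustration, and power density is expressed here in kW per square meter.

```python
def rack_density_kw(total_it_kw: float, rack_count: int) -> float:
    """Average IT load per rack, in kW."""
    return total_it_kw / rack_count


def power_density_kw_per_m2(total_it_kw: float, floor_area_m2: float) -> float:
    """IT power consumed per unit of floor area, in kW per square meter."""
    return total_it_kw / floor_area_m2


# Hypothetical room: 20 racks in 200 square meters of floor space.
RACKS = 20
AREA_M2 = 200.0

# Hypothetical per-rack loads for a traditional and an AI deployment.
for label, kw_per_rack in (("traditional", 8.0), ("AI/GPU", 40.0)):
    total_kw = kw_per_rack * RACKS
    print(
        f"{label:>11}: {total_kw:.0f} kW total, "
        f"{rack_density_kw(total_kw, RACKS):.0f} kW/rack, "
        f"{power_density_kw_per_m2(total_kw, AREA_M2):.1f} kW/m2"
    )
```

Because the floor area is fixed, a fivefold increase in per-rack load produces a fivefold increase in power density, from 0.8 to 4.0 kW per square meter in this example.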

Data center designs need to accommodate the high power density of AI workloads through liquid cooling, modular data centers and racks, and cloud data centers. High-density colocation data centers should also include specialized cabinets designed to handle power loads of up to 85 kW.

Higher power density also means data centers need to meet higher levels of:

- Cooling

- Power distribution
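
To see why power distribution must scale, the sketch below estimates the line current a rack draws, using the standard formula for a balanced three-phase load: I = P / (√3 × V × PF). The 415 V line-to-line supply and 0.95 power factor are assumptions for illustration only. Actual facilities vary, and real circuit sizing must also include safety margins and local electrical codes.

```python
import math


def three_phase_current_amps(load_kw: float, line_voltage_v: float, power_factor: float) -> float:
    """Line current for a balanced three-phase load: I = P / (sqrt(3) * V_LL * PF)."""
    return (load_kw * 1000) / (math.sqrt(3) * line_voltage_v * power_factor)


# Assumed distribution: 415 V line-to-line, power factor 0.95 (illustrative values only).
VOLTAGE_V = 415.0
PF = 0.95

# Compare a hypothetical 8 kW rack with an 85 kW high-density cabinet.
for rack_kw in (8.0, 85.0):
    amps = three_phase_current_amps(rack_kw, VOLTAGE_V, PF)
    print(f"{rack_kw:>4.0f} kW rack -> {amps:6.1f} A per phase")
```

Current scales linearly with load. Under these assumptions, an 85 kW cabinet draws roughly 124 A per phase, which requires much heavier circuits and power distribution units than a conventional rack.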

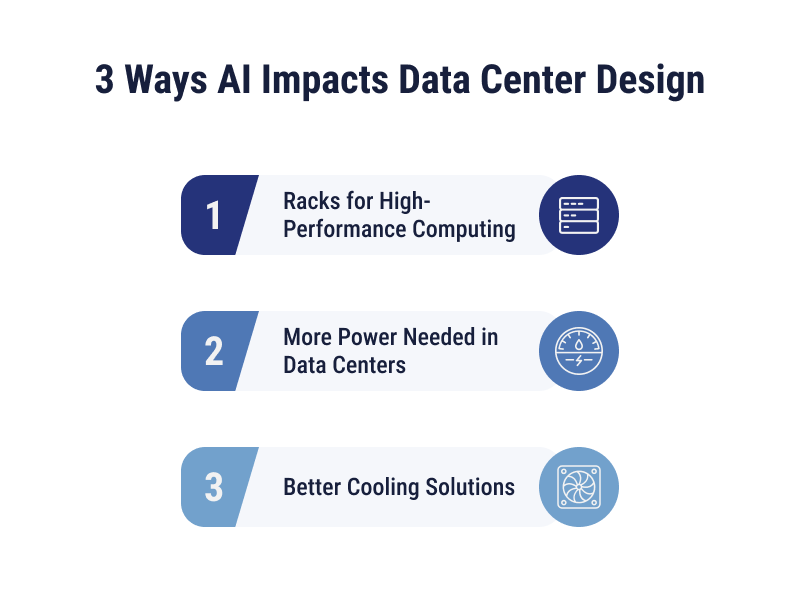

How AI Might Impact Data Center Design

The real question isn’t how AI might impact data center design, but how it is already driving the design and redesign of data centers today. AI’s demands don’t just mean data centers need to be larger to house the workloads; the technology is also changing data center design itself, from new rack types to new cooling methods.

Racks for High-Performance Computing

Racks for high-performance computing can look different from traditional data center racks. Because they are designed to support the higher power density and cooling requirements of AI workloads, they may have larger fans and more air vents, and they may use more efficient cooling systems, such as liquid cooling. Sixty percent of respondents to the AFCOM State of the Data Center Report say their rack density will continue to change.

Rack design may also differ based on provisioning and deployment needs. Modular racks are prefabricated racks that let data centers deploy and support AI workloads quickly.

More Power (Megawatts) Needed in Data Centers

A greater focus on AI also means a need for higher power density. AI workloads are compute-intensive and need specialized hardware, including GPUs and TPUs, which consume more power than CPUs. The racks that house this equipment must be able to handle both the additional power it draws and the additional heat it produces.

Better Cooling Solutions

AI workloads require more sophisticated and efficient cooling methods. Liquid cooling can be used in data center racks with high power densities, such as the ones used to support artificial intelligence.
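
One way to see why liquid suits high-density racks is to compare the coolant flow needed to carry away the same heat. The sketch below applies the sensible-heat relation Q = ṁ × c_p × ΔT to an 85 kW cabinet. It assumes that essentially all IT power becomes heat, uses approximate room-temperature properties for air and water, and uses temperature rises of 12 K for air and 10 K for water. These are illustrative choices, and real systems may use glycol mixtures or dielectric fluids with different properties.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Coolant:
    name: str
    density_kg_m3: float        # approximate density near room temperature
    specific_heat_j_kgk: float  # approximate specific heat capacity


def volumetric_flow_m3_s(heat_w: float, coolant: Coolant, delta_t_k: float) -> float:
    """Volume flow needed to carry heat_w away with a temperature rise of delta_t_k.

    From Q = m_dot * c_p * dT, where m_dot = density * volumetric flow.
    """
    return heat_w / (coolant.density_kg_m3 * coolant.specific_heat_j_kgk * delta_t_k)


AIR = Coolant("air", 1.2, 1005.0)
WATER = Coolant("water", 997.0, 4186.0)

RACK_HEAT_W = 85_000  # assumes the full 85 kW cabinet load is rejected as heat

air_flow = volumetric_flow_m3_s(RACK_HEAT_W, AIR, delta_t_k=12.0)      # assumed 12 K air rise
water_flow = volumetric_flow_m3_s(RACK_HEAT_W, WATER, delta_t_k=10.0)  # assumed 10 K water rise

print(f"air:   {air_flow:.2f} m3/s")
print(f"water: {water_flow * 1000:.2f} L/s")
print(f"air needs roughly {air_flow / water_flow:,.0f}x the volume flow of water")
```

Under these assumptions, the rack needs almost 6 cubic meters of air per second but only about 2 liters of water per second. That difference is why liquid cooling becomes practical as rack densities climb.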

Cooling Requirements of AI Data Centers

Artificial intelligence puts greater demand on the power and density of a data center, and with that comes increased reliance on effective cooling systems. Liquid cooling, whether done through immersion or direct techniques, and as part of an evaporative or closed-loop system, can support these new workloads while being more sustainable in the process.

Immersion Cooling and Direct Cooling

In immersion cooling, IT equipment is submerged in a non-conductive (dielectric) liquid. The liquid absorbs heat from the equipment and is continuously circulated through a heat exchanger, where it is cooled.

In direct cooling, liquid is circulated directly to the CPU or GPU chip before passing through a heat exchanger. The liquid removes heat right at its source, and the system can be either open-loop or closed-loop.

Evaporative Cooling and Closed-Loop Cooling

Evaporative cooling removes heat from a system through the evaporation of water or a non-conductive liquid. Evaporative and closed-loop cooling are not mutually exclusive with immersion or direct cooling; either can be combined with those techniques.

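Closed-loop cooling uses a sealed series of tubes and pipes to deliver coolant or water to IT equipment. Because the coolant recirculates instead of being lost to evaporation, closed-loop cooling consumes less water, which makes it a more efficient and sustainable method than evaporative cooling.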
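
To show why evaporation carries a water cost, the sketch below estimates how much water must evaporate to reject a given heat load. It divides the heat by water’s latent heat of vaporization, which is roughly 2.45 MJ/kg near 20 °C. The 1 MW load is hypothetical. The model assumes that all heat leaves by evaporation and ignores blowdown and drift losses, so it is an idealized estimate rather than a prediction for any real facility. A closed loop recirculates the same coolant, so ideally it loses essentially none to evaporation.

```python
LATENT_HEAT_J_PER_KG = 2.45e6  # approx. latent heat of vaporization of water near 20 C
SECONDS_PER_DAY = 86_400


def evaporated_water_liters_per_day(heat_load_w: float) -> float:
    """Idealized water evaporated to reject heat_load_w, assuming all heat leaves by evaporation.

    Treats 1 kg of water as 1 liter and ignores blowdown and drift losses.
    """
    kg_per_second = heat_load_w / LATENT_HEAT_J_PER_KG
    return kg_per_second * SECONDS_PER_DAY


# Hypothetical 1 MW heat load, for illustration only.
print(f"{evaporated_water_liters_per_day(1_000_000):,.0f} L/day")
```

Under these assumptions, rejecting 1 MW of heat by evaporation consumes on the order of 35,000 liters of water per day.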

Adapting to AI Using a High-Density Colocation Data Center with TierPoint

If your company is rapidly innovating, and you’re worried about your current data center’s ability to support your HPC or AI workloads, consider moving to a high-density colocation data center. TierPoint’s high-density colocation data centers offer state-of-the-art cabinets and are built to deliver a robust power infrastructure, modern and efficient closed-loop cooling, and a secure and scalable space for your ever-growing environment.

Learn more about our high-density colocation services and how they can accommodate your complex workloads.